
Hi there, thanks for visiting my website! I am a Ph.D. candidate at Tsinghua Shenzhen International Graduate School (SIGS), Tsinghua University, where I am fortunate to be advised by Prof. Shao-Lun Huang. Before that, I received my bachelor's degree (with honors) in computer science and technology from Xi'an Jiaotong University (2019-2023), where I had a wonderful time conducting research at the LUD lab, working closely with Shangbin Feng under the supervision of Prof. Minnan Luo and Prof. Qinghua Zheng. My research interests lie in applying information theory and statistics to analyze, interpret, and design machine learning algorithms. In particular, my current work focuses on transfer learning and parameter-efficient fine-tuning of large models (e.g., LoRA). I also have prior research experience in social network analysis, anomaly detection, and graph neural networks.

Email: CV / Google Scholar / Twitter / GitHub


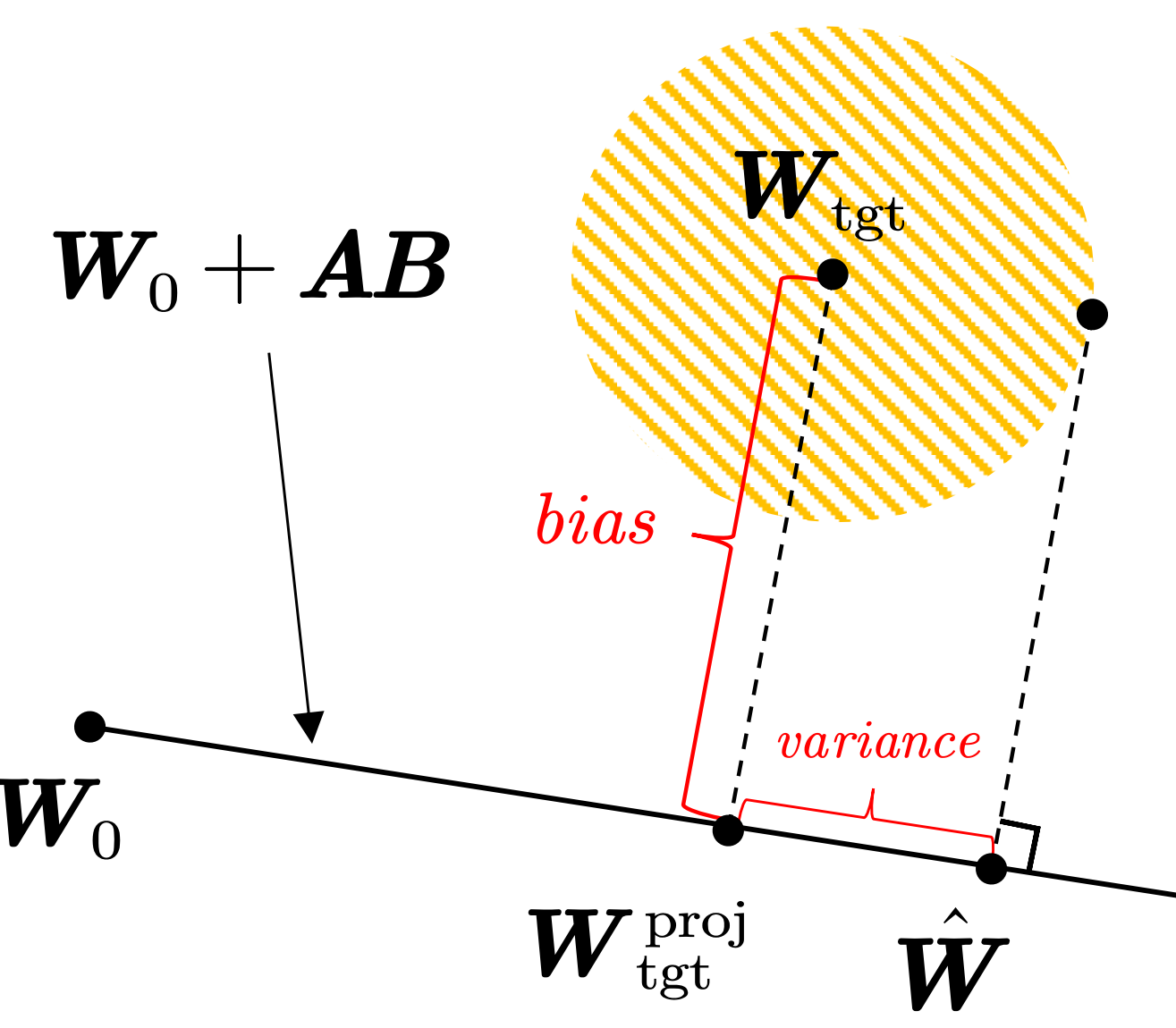

Qingyue Zhang*, Chang Chu*, Tianren Peng, Qi Li, Xiangyang Luo, Zhihao Jiang, Shao-Lun Huang

Preprint, under review.

We propose LoRA-DA, a theoretically grounded, data-aware initialization method for LoRA. Based on an asymptotic analysis, our framework decomposes the fine-tuning error into a variance term (arising from the Fisher information) and a bias term (arising from the Fisher-gradient), which together yield the optimal LoRA initialization. Experiments across multiple benchmarks show that LoRA-DA consistently improves accuracy and convergence speed over previous LoRA initialization methods.
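
For readers less familiar with LoRA, the sketch below shows the standard, data-agnostic initialization described in the original LoRA paper (Hu et al., 2021), i.e. the kind of baseline that a data-aware scheme aims to improve on. It is not LoRA-DA itself: A is drawn from a small Gaussian and B is set to zero, so the low-rank update B·A vanishes at the start of fine-tuning. The helper names (`matmul`, `init_lora`, `lora_weight`) and the default `std` are hypothetical choices for illustration, and plain Python lists stand in for tensors.

```python
import random


def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*Y)] for row in X]


def init_lora(d_out, d_in, rank, std=0.01, seed=0):
    """Standard (data-agnostic) LoRA init: A ~ N(0, std^2) of shape (rank, d_in), B = 0 of shape (d_out, rank)."""
    rng = random.Random(seed)
    A = [[rng.gauss(0.0, std) for _ in range(d_in)] for _ in range(rank)]
    B = [[0.0] * rank for _ in range(d_out)]
    return A, B


def lora_weight(W, A, B, alpha, rank):
    """Effective weight W + (alpha / rank) * B @ A for a frozen weight W of shape (d_out, d_in)."""
    scale = alpha / rank
    delta = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)] for w_row, d_row in zip(W, delta)]


# Usage: at initialization the low-rank update is zero, so the layer is unchanged.
W = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]  # frozen pretrained weight, d_out=2, d_in=3
A, B = init_lora(d_out=2, d_in=3, rank=1)
print(lora_weight(W, A, B, alpha=16, rank=1) == W)  # True
```

Because B starts at zero, the adapted weight initially equals the pretrained weight W, and only gradient updates move it away; an initialization method changes this starting point for A and B.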


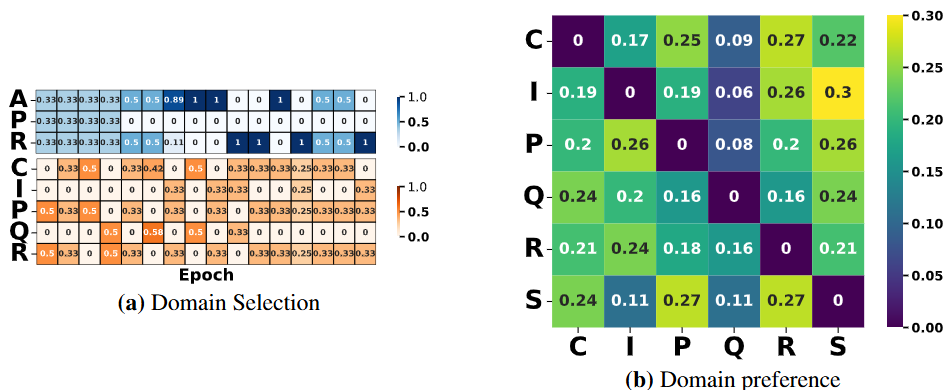

Qingyue Zhang*, Haohao Fu*, Guanbo Huang*, Yaoyuan Liang, Chang Chu, Tianren Peng, Yanru Wu, Qi Li, Yang Li, Shao-Lun Huang

Proceedings of NeurIPS, 2025 (CCF-A)

code

We propose a theoretical framework that answers the question: what is the optimal number of samples to draw from each source task when jointly training the target model? Specifically, we introduce a generalization error measure based on the Kullback-Leibler (KL) divergence and minimize it via asymptotic analysis to determine the optimal transfer quantity for each source task. We further develop OTQMS, an architecture-agnostic and data-efficient algorithm that implements these theoretical results for target-model training in multi-source transfer learning.
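
The error measure above is built on the KL divergence. As a reminder of that underlying quantity only (not the paper's generalization error measure or the OTQMS algorithm), the sketch below computes D(P || Q) = sum over x of P(x) log(P(x) / Q(x)) in nats for two discrete distributions given as probability lists. The function name `kl_divergence` is a hypothetical choice.

```python
import math


def kl_divergence(p, q):
    """KL divergence D(P || Q) in nats for discrete distributions given as equal-length lists."""
    if len(p) != len(q):
        raise ValueError("p and q must have the same length")
    total = 0.0
    for px, qx in zip(p, q):
        if px == 0.0:
            continue  # convention: 0 * log(0 / q) = 0
        if qx == 0.0:
            return math.inf  # P puts mass where Q has none
        total += px * math.log(px / qx)
    return total


print(kl_divergence([0.5, 0.5], [0.5, 0.5]))  # 0.0
print(kl_divergence([1.0, 0.0], [0.5, 0.5]))  # log 2, about 0.693
print(kl_divergence([0.5, 0.5], [1.0, 0.0]))  # inf
```

The last two calls illustrate that the divergence is not symmetric, so the order of the arguments matters whenever it is used as an error measure.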


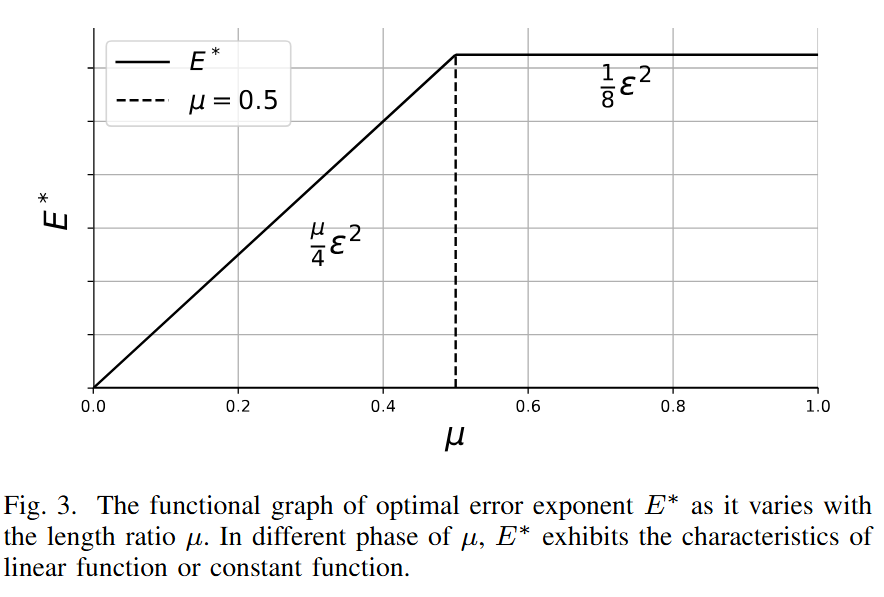

Qingyue Zhang, Xinyi Tong, Tianren Peng, Shao-Lun Huang

Proceedings of ISIT, 2025 (Tsinghua-A & TH-CPL-B)

We study fixed-length hypothesis testing in which the number of training samples is in a fixed ratio to the number of testing samples. We derive the optimal error exponent and the corresponding decision rule, and reveal a phase transition governed by this ratio: when the training portion exceeds one half, the error exponent attains the classical Chernoff information; when it falls below one half, the exponent decreases linearly. This establishes a principled benchmark for machine learning, clarifying how the balance between training and testing samples affects achievable performance.


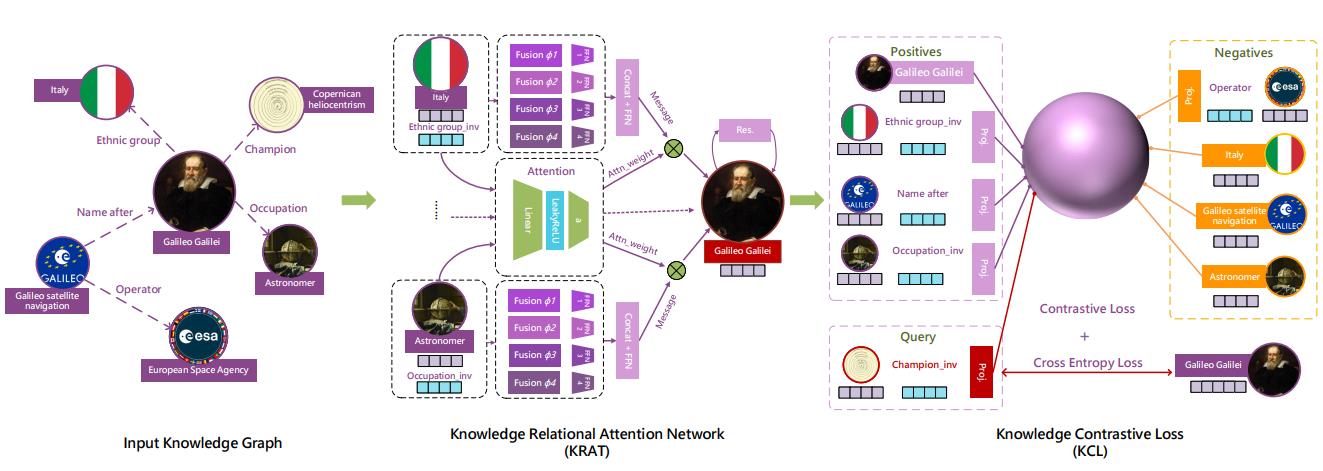

Zhaoxuan Tan, Zilong Chen, Shangbin Feng, Qingyue Zhang, Qinghua Zheng, Jundong Li, Minnan Luo

Proceedings of The Web Conference (WWW), 2023 (CCF-A)

code / talk

We adopt contrastive learning and a knowledge relational attention network to alleviate the widespread sparsity problem in knowledge graphs.


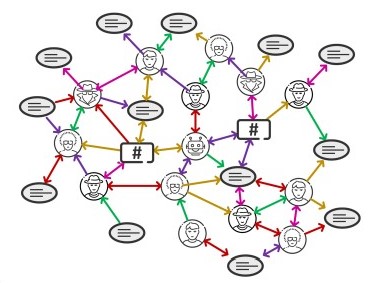

Shangbin Feng*, Zhaoxuan Tan*, Herun Wan*, Ningnan Wang*, Zilong Chen*, Binchi Zhang*, Qinghua Zheng, Wenqian Zhang, Zhenyu Lei, Shujie Yang, Xinshun Feng, Qingyue Zhang, Hongrui Wang, Yuhan Liu, Yuyang Bai, Heng Wang, Zijian Cai, Yanbo Wang, Lijing Zheng, Zihan Ma, Jundong Li, Minnan Luo

Proceedings of NeurIPS, Datasets and Benchmarks Track, 2022 (CCF-A)

website / GitHub / bibtex / poster

We present TwiBot-22, the largest graph-based Twitter bot detection benchmark to date, which provides diversified entities and relations in the Twittersphere and has considerably better annotation quality than existing datasets.


Tsinghua University

Tsinghua Shenzhen International Graduate School, 2023.09 - present

Ph.D. in Data Science and Information Technology (under the first-level discipline of Computer Science and Technology)

Advisor: Prof. Shao-Lun Huang


Xi'an Jiaotong University

2019.08 - 2023.07

B.E. in Computer Science and Technology (with honors)

Advisor: Prof. Minnan Luo


Northeast Yucai School

2016.09 - 2019.06

Mathematics Experimental Class


School of Computing Summer Workshop @ National University of Singapore

Research Assistant, 2021.05 - 2021.07

Worked on a project on detecting Twitter bots with graph neural networks.

Advisor: Prof. LEK Hsiang Hui


Luo lab Undergraduate Division (LUD) @ Xi'an Jiaotong University

Member, 2021.09 - 2023.07

Conducted research on various topics, including social network analysis, anomaly detection, few-shot learning, and natural language processing.

Advisor: Prof. Minnan Luo


Template courtesy: Jon Barron.